Overview

Recently, I've been really into studying English...

- (2024-05-31) The Road to Eiken Pre-1 Grade 2024-05

- (2024-06-28) The Road to Eiken Pre-1 Grade 2024-06

- (2024-07-31) The Road to Eiken Pre-1 Grade 2024-07

One of the pillars supporting this craze is my favorite vocabulary book, Distinction 2000. Let's talk about it.

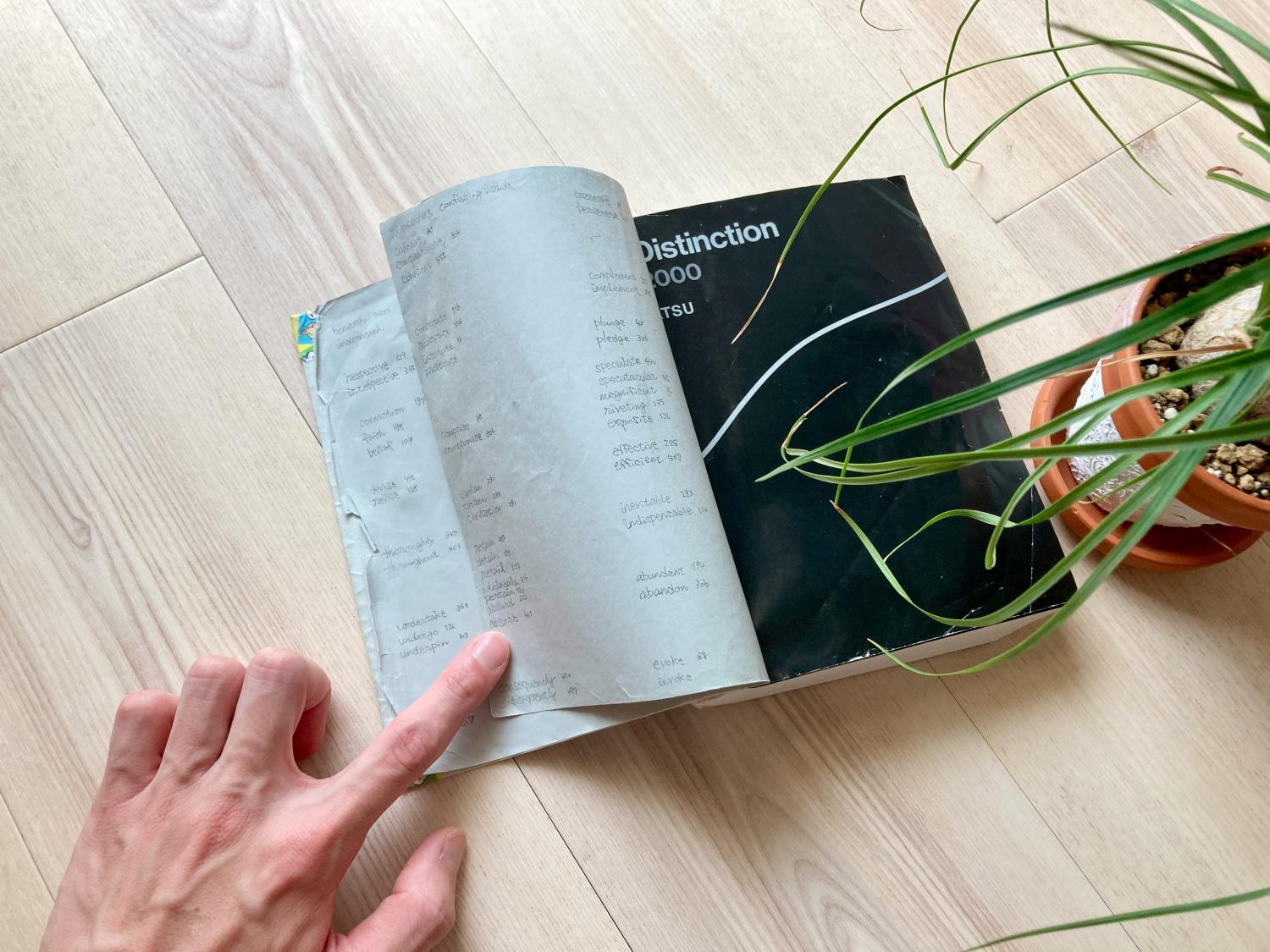

These scribbles are my personal "easily confused words set." Let me show you some examples. This kind of collection is fun.

- Similar sets

- timid

- intimidate

- Sets with almost the same meaning but different nuances

- controversial

- contentious

What’s Good About It

- It’s structured in a way that makes “repeated exposure” easy.

- Each chapter specifies “the chapters to review next.” By following this, you can see all the chapters six times each.

- When memorizing, “repeated exposure” is the most effective. The fact that it’s easy to do this is a good point.

- Instead of just listing words, each chapter provides longer example sentences that include all the words.

- For “repeated exposure,” reading example sentences is easier than looking at lists of words.

- When you see a word somewhere else, it’s easier to remember it as “I saw it in that context” rather than “I saw it in that vocabulary book,” right?

I used to have a habit of memorizing difficult words by using mnemonics and other tricks. But there are an infinite number of words, so doing that isn’t cost-effective. We don’t remember Japanese words by trying to memorize them. We remember them because we see them many times. For English words, it’s more natural and healthier to remember them because we’ve seen them many times.

The Audio Data is a Bit Inconvenient

There’s audio data for the “example sentences including the words” that I praised above.

I’d like to play the example sentences from all 40 chapters randomly, but each example sentence is divided into four paragraphs, and the audio data is recorded per paragraph. Because of this, if you play them randomly, the flow of the story gets interrupted. So, I used ffmpeg to combine the paragraph-by-paragraph audio data into audio data for each chapter.

ffmpeg -i "concat:Ch.01 Immigration #academic1.mp3|Ch.01 Immigration #academic2.mp3|Ch.01 Immigration #academic3.mp3|Ch.01 Immigration #academic4.mp3" \

-acodec copy "./output/Ch.01 Immigration #academic.mp3"

rm "Ch.01 Immigration #academic1.mp3" \

"Ch.01 Immigration #academic2.mp3" \

"Ch.01 Immigration #academic3.mp3" \

"Ch.01 Immigration #academic4.mp3"

I prepared this ↑ for 40 chapters and started listening to them.

# Combine files like these

Ch.01 Immigration #academic1.mp3

Ch.01 Immigration #academic2.mp3

Ch.01 Immigration #academic3.mp3

Ch.01 Immigration #academic4.mp3

# Into a single file like this

Ch.01 Immigration #academic.mp3